JoyFeed: Journey to Success with HPE at AngelHack SF

This post is also published on HPE Haven OnDemand’s official blog here.

The sky was dark and gloomy when we left Sunnyvale for San Francisco; halfway along US-101, the rain started pouring down. We were on our way to AngelHack’s 9th Global Hackathon, an event we were so hyped about, but the rain was dampening our mood. We parked a block away, and by the time we had walked to Weebly’s state-of-the-art headquarters, where the event was held, we were soaking wet and badly in need of some positivity.

Had I been alone rather than with a friend, I imagine I would have sat in a corner, pulled out my phone and launched Twitter.

But what if the first post I saw was a piece of totally depressing news? I just wanted to feel better. Give me a break.

Well, that is exactly why we built JoyFeed.

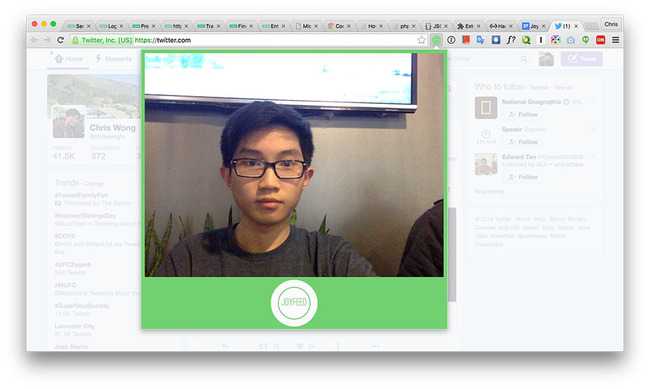

JoyFeed is a browser extension that analyzes the user’s facial expression and filters their social feed based on their current emotion and the sentiment of each post. Using HPE Haven OnDemand’s APIs, we were able to seamlessly take JoyFeed from a mere sketch to a fully working product.

The Idea

Good ideas often surface subconsciously. Eager to hack with Haven OnDemand’s services, we went through the list of available APIs and brainstormed possible combinations. Inspiration struck both of us when we came across the Sentiment Analysis API, which was prominently featured on their promotional flyer. We agreed that it would be a cool feature to build on, and that machine learning would make a fantastic companion to it.

Well, how would machine learning fit into sentiment analysis? Still feeling pensive because of the weather, we both wanted to make the world a happier place. We first thought of an app that runs every post in a social feed through Haven OnDemand’s Sentiment Analysis API and hides every post deemed negative, but we quickly dismissed the idea after realizing that it would amount to censorship. But what if an additional layer of validation ran before the posts were analyzed, so that negative posts would be hidden only from users who really needed it? It turned out that Haven OnDemand’s Predict API was the perfect tool for this, as we could train a model to predict what the user is currently feeling!

The Game Plan

We quickly put our ideas on paper and sketched the workflow. To train a model to predict the user’s emotion, we used Microsoft Cognitive Services’ Emotion API to analyze facial expressions. The API returns a set of scores across multiple categories, including (but not limited to) Happiness, Sadness, Fear and Disgust. We ran approximately 50 faces through the API and labeled each set of scores as “positive”, “neutral” or “negative”, depending on what we thought the person in the picture was feeling. We then gathered this data into a CSV file and used it to train the machine learning model with Haven OnDemand’s Train Prediction API. Copying and pasting seven values per photo, 50 times over, seemed like a heavy task, so we wrote a parser in Java that automatically converts each photo’s values into a single CSV line, which sped up data collection considerably.
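The post doesn’t include the Java parser, but the idea is simple enough to sketch. The TypeScript below is a minimal illustration, not JoyFeed’s actual code: it turns one photo’s emotion scores plus a hand-assigned label into a CSV row. The seven column names and the `mood` header are assumptions (the post names only some of the categories), as are the helper names `toTrainingRow` and `toTrainingCsv`.

```typescript
// Hypothetical column order: the post mentions seven values per photo but
// names only some of them, so this list is an assumption.
const EMOTION_COLUMNS = [
  "anger", "contempt", "disgust", "fear", "happiness", "sadness", "surprise",
] as const;

type Emotion = (typeof EMOTION_COLUMNS)[number];
type Mood = "positive" | "neutral" | "negative";
type EmotionScores = Record<Emotion, number>;

// Turns one photo's scores plus a hand-assigned label into a single CSV row,
// always emitting the columns in the same order so every row lines up.
function toTrainingRow(scores: EmotionScores, label: Mood): string {
  const values = EMOTION_COLUMNS.map((name) => {
    const v = scores[name];
    if (!Number.isFinite(v)) {
      throw new Error(`missing or invalid score for "${name}"`);
    }
    return String(v);
  });
  return [...values, label].join(",");
}

// Builds the whole training file: a header row followed by one row per photo.
// The "mood" header name is a placeholder.
function toTrainingCsv(samples: Array<{ scores: EmotionScores; label: Mood }>): string {
  const header = [...EMOTION_COLUMNS, "mood"].join(",");
  const rows = samples.map((s) => toTrainingRow(s.scores, s.label));
  return [header, ...rows].join("\n");
}
```

For example, `toTrainingRow({ anger: 0, contempt: 0.01, disgust: 0, fear: 0, happiness: 0.97, sadness: 0.01, surprise: 0.01 }, "positive")` yields `0,0.01,0,0,0.97,0.01,0.01,positive`. Fixing the column order in one place is what keeps every row aligned with the header, which is exactly what tends to go wrong when values are copied by hand.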

The most difficult part of our hack was parsing the posts and filtering them. We initially planned to build a mobile app, using iOS’ Camera API and Twitter’s Streaming API to show a filtered list of tweets. We chose Twitter for our demo because of its limited post types, which made parsing significantly easier, and its 140-character limit, which made analysis and response marginally quicker. However, two hours in, we pivoted: it made more sense to build JoyFeed as a browser extension that acts on Twitter’s timeline directly rather than as a mobile app. We scrapped everything we had written and started all over again.

As it turned out, Chrome did not allow extensions to access webcams directly, but we found a way around that. We used the browser’s getUserMedia() API to access the webcam, called the canvas’s drawImage() to capture a frame when the user clicks the “JoyFeed” button, and then encoded that frame as a base64 data URL with toDataURL(). The photo was then uploaded to Imgur through their public API, and the image link was sent to Microsoft Cognitive Services for emotion analysis. Once the service returned its set of scores, we sent them to Haven OnDemand’s Predict API, which told us whether the user was feeling positive, neutral or negative.
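Here is a rough sketch of that capture-and-classify chain in TypeScript. `captureFrame` follows the getUserMedia(), drawImage(), toDataURL() sequence described above and assumes camera access has already been granted (the post doesn’t say how JoyFeed worked around Chrome’s restriction). The `MoodServices` interface and every name in it are hypothetical stand-ins for the Imgur, Microsoft Emotion API and Haven OnDemand Predict calls, whose request formats are not shown here.

```typescript
type Mood = "positive" | "neutral" | "negative";
type EmotionScores = Record<string, number>;

// Captures a single webcam frame and returns it as a PNG data URL.
// Assumes it runs somewhere camera access has already been permitted.
async function captureFrame(): Promise<string> {
  const stream = await navigator.mediaDevices.getUserMedia({ video: true });
  try {
    const video = document.createElement("video");
    video.srcObject = stream;
    video.muted = true;
    await video.play(); // resolves once playback starts, so dimensions are known
    const canvas = document.createElement("canvas");
    canvas.width = video.videoWidth;
    canvas.height = video.videoHeight;
    const ctx = canvas.getContext("2d");
    if (!ctx) throw new Error("2D canvas context unavailable");
    ctx.drawImage(video, 0, 0, canvas.width, canvas.height);
    return canvas.toDataURL("image/png");
  } finally {
    stream.getTracks().forEach((track) => track.stop()); // release the camera
  }
}

// Strips the "data:image/png;base64," prefix, leaving the raw base64 payload
// that an image-upload API would typically expect.
function dataUrlToBase64(dataUrl: string): string {
  const marker = ";base64,";
  const at = dataUrl.indexOf(marker);
  if (!dataUrl.startsWith("data:") || at === -1) {
    throw new Error("expected a base64-encoded data URL");
  }
  return dataUrl.slice(at + marker.length);
}

// Hypothetical wrappers around the three web services.
interface MoodServices {
  uploadImage(base64: string): Promise<string>; // resolves to a public image URL
  analyzeEmotion(imageUrl: string): Promise<EmotionScores>;
  predictMood(scores: EmotionScores): Promise<Mood>;
}

// Chains the steps in the order the post describes: upload, analyze, predict.
async function detectMood(dataUrl: string, services: MoodServices): Promise<Mood> {
  const imageUrl = await services.uploadImage(dataUrlToBase64(dataUrl));
  const scores = await services.analyzeEmotion(imageUrl);
  return services.predictMood(scores);
}
```

In the extension, a click handler on the “JoyFeed” button could call `captureFrame().then((url) => detectMood(url, services))`. Keeping the services behind an interface also makes it easy to exercise the chain with stubs instead of live API calls.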

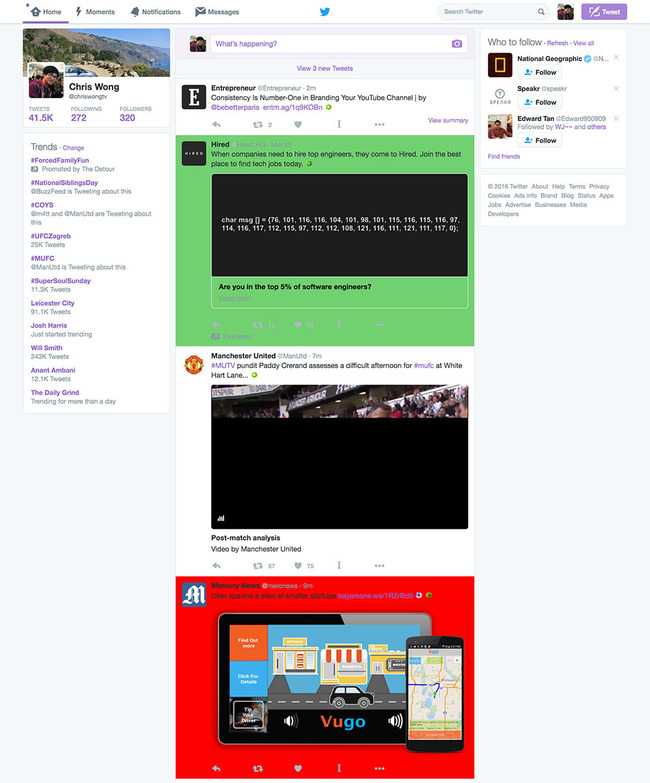

Now that we know how the user is feeling, it’s time to filter out the negative posts. Before we can run the posts (in this case, tweets) through Haven OnDemand’s Sentiment Analysis API, we have to extract the content of every tweet. We used Chrome’s content_scripts manifest key to inject a script into Twitter’s page, giving us access to the DOM where all the front-end elements live, and used the DOM’s getElementsByClassName() to get each tweet individually through a shared CSS class. For each tweet, we navigate into its body, grab the text, and send it to HPE’s Sentiment Analysis API to find out whether it is classified as positive, neutral or negative. If the user is feeling unhappy, the extension hides every tweet categorized as negative; for demonstration purposes, however, we highlighted negative tweets in red and added a button that hides all of them in one click.
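The filtering step can be sketched the same way. In this hypothetical TypeScript version, `actionFor` encodes the gating rule (negative tweets are touched only when the user’s predicted mood is negative), `filterTweets` classifies each tweet through an injected `classify` function standing in for the Sentiment Analysis API call, and `collectTweets` is a browser adapter built on getElementsByClassName(). The class names passed to it are placeholders rather than Twitter’s real markup, and applying the mood gate in demo mode as well is our assumption.

```typescript
type Mood = "positive" | "neutral" | "negative";
type Sentiment = Mood;
type TweetAction = "show" | "highlight" | "hide";

// Minimal view of a tweet so the filtering logic can be tested without a browser.
interface TweetNode {
  text: string;
  highlight(): void;
  hide(): void;
}

// Negative tweets are only touched when the user is feeling negative, so the
// feed is never filtered for a user who is in a good mood.
function actionFor(userMood: Mood, sentiment: Sentiment, demoMode: boolean): TweetAction {
  if (userMood !== "negative" || sentiment !== "negative") return "show";
  return demoMode ? "highlight" : "hide";
}

// Classifies every tweet's text, applies the chosen action, and returns the
// flagged tweets so a "hide all" button can hide them in one click.
async function filterTweets(
  tweets: TweetNode[],
  userMood: Mood,
  classify: (text: string) => Promise<Sentiment>,
  demoMode: boolean,
): Promise<TweetNode[]> {
  const sentiments = await Promise.all(tweets.map((t) => classify(t.text)));
  const flagged: TweetNode[] = [];
  tweets.forEach((tweet, i) => {
    const action = actionFor(userMood, sentiments[i], demoMode);
    if (action === "highlight") tweet.highlight();
    if (action === "hide") tweet.hide();
    if (action !== "show") flagged.push(tweet);
  });
  return flagged;
}

// Browser adapter: finds tweets through a shared CSS class. Both class names
// are hypothetical placeholders, not Twitter's actual markup.
function collectTweets(doc: Document, tweetClass: string, textClass: string): TweetNode[] {
  return Array.from(doc.getElementsByClassName(tweetClass)).map((el) => {
    const box = el as HTMLElement;
    const body = box.getElementsByClassName(textClass)[0];
    return {
      text: body?.textContent ?? "",
      highlight: () => { box.style.outline = "2px solid red"; },
      hide: () => { box.style.display = "none"; },
    };
  });
}
```

The flagged tweets returned by `filterTweets` are what a “hide all” button would act on, for example `button.addEventListener("click", () => flagged.forEach((t) => t.hide()))`. Sending the classification requests with `Promise.all` lets them run concurrently instead of one tweet at a time.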

The Pitch

After countless Red Bulls, pizza breaks and pool games, we had a fully functioning prototype. Fortunately, we finished JoyFeed with a few hours remaining on the clock, so we spent a good deal of time fine-tuning our pitch, recording a video walkthrough and writing our hackathon submission. Hours later, when the demo period finally began, we went to HPE’s booth and were the first team to pitch to the judges. Thankfully, it went smoothly, with Haven OnDemand’s servers responding swiftly and accurately to our API requests.

Finally, it was time to announce the winners. HPE’s challenge winners were the last to be announced, so we waited anxiously for the results. Joy filled our minds when we heard JoyFeed named the winner of HPE’s challenge (no pun intended), and we went on stage to collect our prizes from HPE’s representatives. At the end of the day, we brought home GoPros, happiness and satisfaction, and all of it was truly rewarding.

Final Thoughts

We aimed for it, we worked for it, and we earned it. This was one of those days when hard work is rewarded with exactly what we strove for. All in all, we’d like to end this piece by expressing our gratitude and appreciation to HPE and AngelHack for the unforgettable opportunity and experience. If you would like to check out our code, we have posted detailed instructions for configuring, installing and using JoyFeed in its GitHub repository, linked below. Feel free to try it out and let us know what you think!